Parasocial 2.0: Falling for a Bot—Healthy or Harmful?

Some links are affiliate links. If you shop through them, I earn coffee money—your price stays the same.

Opinions are still 100% mine.

Hey there, Tom here. The question that keeps blowing my mind: what happens when your closest friend is an algorithm? A few years ago, falling for a bot sounded like sci-fi. Now millions of people use customized AI companions, and experts call this wave "Parasocial 2.0."

We’ve moved from one-way crushes on celebrities to 24/7 companions we design ourselves. This article asks whether these AI relationships are healthy or harmful—and how to keep boundaries if you choose to engage.

What Is Parasocial 2.0?

Classic parasocial bonds were one-sided attachments to media figures who never knew we existed. Parasocial 2.0 is interactive: AI companions learn from you, adapt to your cues, and mirror your preferences, creating the feeling of reciprocity even though the “relationship” remains algorithmic.

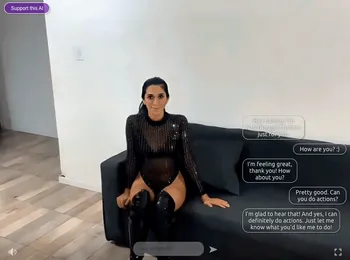

Platforms like Character.AI or Janitor AI let you design a companion's personality, backstory, and appearance, so the bond feels more bespoke and responsive than a traditional celebrity crush.

Why People “Fall for” Bots

People get attached because bots are endlessly available, never judgmental, and highly customizable. Memory systems recall past chats, which creates a sense of continuity. Round-the-clock access, tone-matching, and tailored personalities add up to a kind of emotional availability that can feel safer than human relationships.

Potential Benefits (When It Can Be Healthy)

Used mindfully, AI companions can support skill-building and mood regulation. They offer practice for communication, a stigma-free place to vent, and steady encouragement when human support isn’t available.

| Benefit | How it helps |
|---|---|
| Combatting Loneliness | 24/7 conversation can reduce isolation when other support is unavailable. |
| A Place to Open Up | Non-judgmental space to process feelings and rehearse difficult conversations. |
| Developing Communication Abilities | Low-stakes practice for empathy, listening, and self-expression. |
| Emotional Encouragement | Consistent positive reinforcement and reframing during stressful periods. |
| Available 24/7 | Responsive support at any hour, something human networks may not provide. |

Risks and Red Flags (When It Turns Harmful)

The same traits that feel comforting can create traps. Watch for dependency, avoidance of real relationships, and pressure to keep paying for access or tokens. Remember that privacy risks are real—your chats may fuel ads or model training.

- Dependency & Isolation: Choosing bots over people most days, skipping social plans, or feeling anxious when logged off.

- Boundary Confusion: Treating algorithmic responses as genuine consent or emotional reciprocity.

- Pay-to-Chat Pressure: Feeling pushed to spend to maintain “closeness” or unlock NSFW/media features.

- Privacy/Data Risks: Sharing intimate details that could be retained, sold, or breached.

- Unrealistic Expectations: Comparing human partners to always-agreeable AI personas.

Healthy Boundaries and Best Practices

Set intentional guardrails so AI remains a supplement, not a substitute:

- Time budgets: Cap daily or weekly usage; schedule device-free blocks.

- Diversify connections: Pair AI chats with human touchpoints each week.

- Clarify goals: Decide if you’re practicing conversation, mood regulation, or simply unwinding.

- Privacy hygiene: Avoid sharing personally identifiable information (PII), use in-app privacy settings, and clear chat histories when possible.

- Content filters: Enable safety filters if available; adjust NSFW settings to your comfort.

- Plan off-ramps: Take breaks, especially after intense sessions, and notice mood shifts afterward.

When to Seek Help

Consider talking to a professional if you feel distressed when away from the bot, hide your usage, spend beyond your means to stay connected, or withdraw from friends and work. In a crisis, contact local emergency services or a recognized hotline (e.g., a national suicide prevention line) for immediate support.

Further Reading and Alternatives

Explore platform-specific pros and cons in our in-depth reviews: Candy.ai Review (2025), Sweetdream.ai Review 2025, and Nomi.ai Review (2025). For boundary-setting guidance, see our cornerstone guide on setting boundaries with your AI partner.